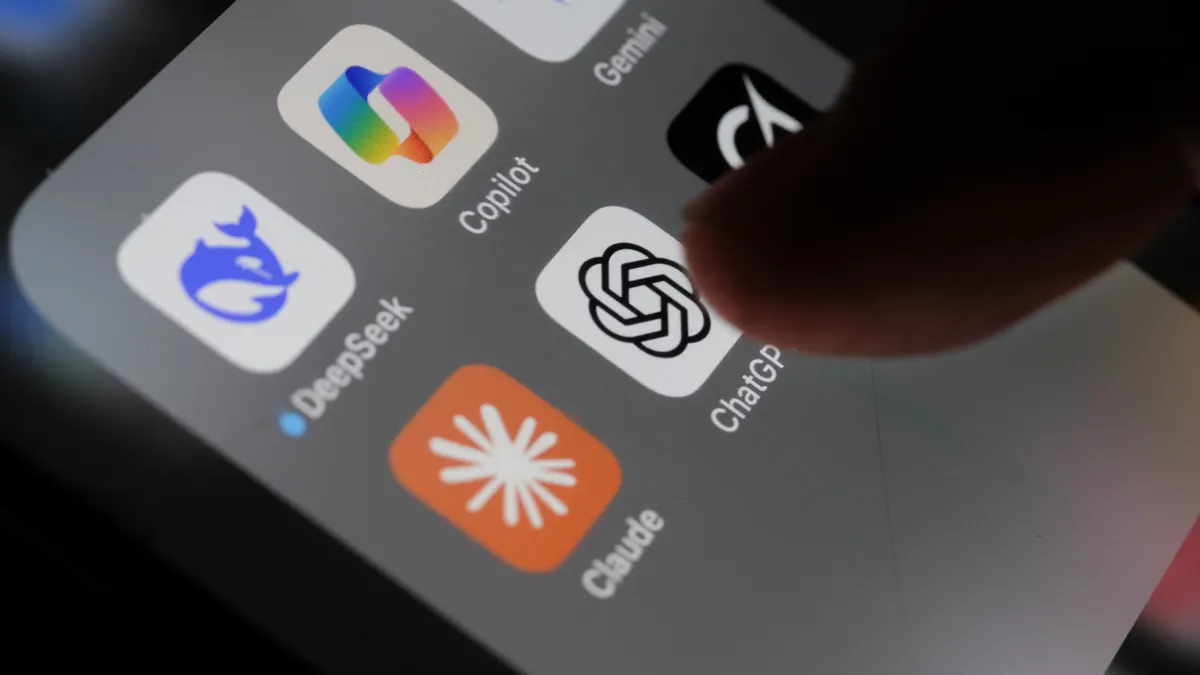

A cruise around the expo floor at last month’s International Association of Chiefs of Police conference in Denver revealed AI at every turn. From surveillance systems and facial recognition tools to software that writes police reports and triages 911 calls, the exhibits made clear that artificial intelligence can be deeply embedded in modern policing.

During the conference, Oracle unveiled a platform that uses AI-driven analytics and voice controls to automate police reporting and, it says, improve first responder decision-making. Also on display: a digital evidence management system designed to accelerate investigations, an AI desk officer that reportedly can handle up to 1,000 non-emergency calls at a time and take reports in 25 languages, a chatbot that aims to improve suspicious activity reporting and a video analysis program whose maker says it transforms body camera data into reports in seconds. Booth after booth showcased AI tools for nearly every aspect of police work, even officer wellness.

“A couple years ago, the question was, do we fight AI or do we work with it?” said Doug Kazensky, a former training sergeant at the Longview, Wash., police department, now senior solutions engineer at Vector Solutions, who was at the show. “Well, guess what? It’s coming, and it isn’t stopping anytime soon.”

This year’s Public Safety Trends Report, a national survey of first responders, found that 90% of respondents support their agencies using AI, a 55% increase over last year’s survey. The majority, 88%, said they trust that their agencies will use the technology responsibly, a 29% increase since last year.

“We’re not going to gamble with personal liberty”

AI solutions are intended to make law enforcement more effective and efficient, Kazensky said, “and those are noble things.”

“But sometimes,” he added, “there are unintended consequences.”

Just days before vendors and law enforcement officers converged in Denver, California Gov. Gavin Newsom, D, signed a law designed to start addressing those potential consequences. SB 524 requires law enforcement agencies to disclose whether AI was used to generate any part of official reports and maintain an audit trail for as long as the report is retained. In March, Utah Gov. Spencer Cox, R, signed a similar bill.

“We’re not going to gamble with personal liberty,” California State Sen. Jesse Arreguín, who wrote SB 524, said in a statement. “AI hallucinations happen at significant rates, and what goes in a police report can influence whether or not the state takes away someone’s freedom.”

AI hallucinations, false or misleading outputs delivered as facts, are a threat not only to individuals’ freedom but to the public’s trust in law enforcement, Kazensky said. To keep them at bay, agencies need to vet AI tools as diligently as they would officer recruits and put procedures in place to ensure officers are checking the tools’ output.

AI tools trained on internal data are safer than those that scoop up data from the internet, Kazensky said. He called the latter a quagmire of disinformation that can lead to hallucinations.

He’s also concerned about data captured by a video camera, but not witnessed by an officer, being entered into reports. “It’s not that it didn’t happen,” Kazensky said. “It’s just a question of, if I didn’t see it, should it be in my police report?”

Law enforcement agencies must be open and transparent about how and why they’re using AI, Kazensky said. Local government leaders can help ensure that happens.

“There is a part for city and county government leaders to play in getting law enforcement agencies tools that improve their processes, but they need to do it in a way that’s open and fair and communicative with everyone,” he said. “Not everyone’s going to love it. There’s always going to be some contingency that says absolutely not. So, you have to listen to as many voices and address as many concerns as you can.”