Editor's note: This article was originally published in American City & County, which has merged with Smart Cities Dive to bring you expanded coverage of city innovation and local government. For the latest in smart city news, explore Smart Cities Dive or sign up for our newsletter.

In the evolving world of artificial intelligence (AI), data quality is paramount. Large language model (LLM) performance and trustworthiness rely on the richness and accuracy of data. However, many local governments struggle to adopt advanced technology simply because their data isn't ready.

Organizations are overwhelmed with large amounts of data from different sources, making data management a common challenge for AI development. As organizations and chief data officers (CDOs) continue to integrate these technologies, they need a plan for verifying data integrity and standardizing data throughout the extraction process, so that cleaned data is ready for AI consumption.

Understanding decentralized data

Decentralized data is an ongoing challenge for city and county governments. Decades of established practices have resulted in outdated governance protocols for data synchronization, with each organization often operating in its own silo, collecting and managing data independently. This lack of standardization contributes to data inconsistencies and quality issues. No single agency possesses a complete picture of individuals interacting with the government, and new applications often exacerbate the problem instead of addressing it.

Data across local government also resides in diverse technologies and formats, such as mainframes or web applications, further complicating integration and analysis. This heterogeneity makes it difficult to create a cohesive data environment for AI, which depends on robust datasets to run well. When data is scattered across disparate systems, it limits what AI can analyze and hinders its ability to generate actionable insights. It's like trying to assemble furniture with only half the instructions: you won't get the desired result.

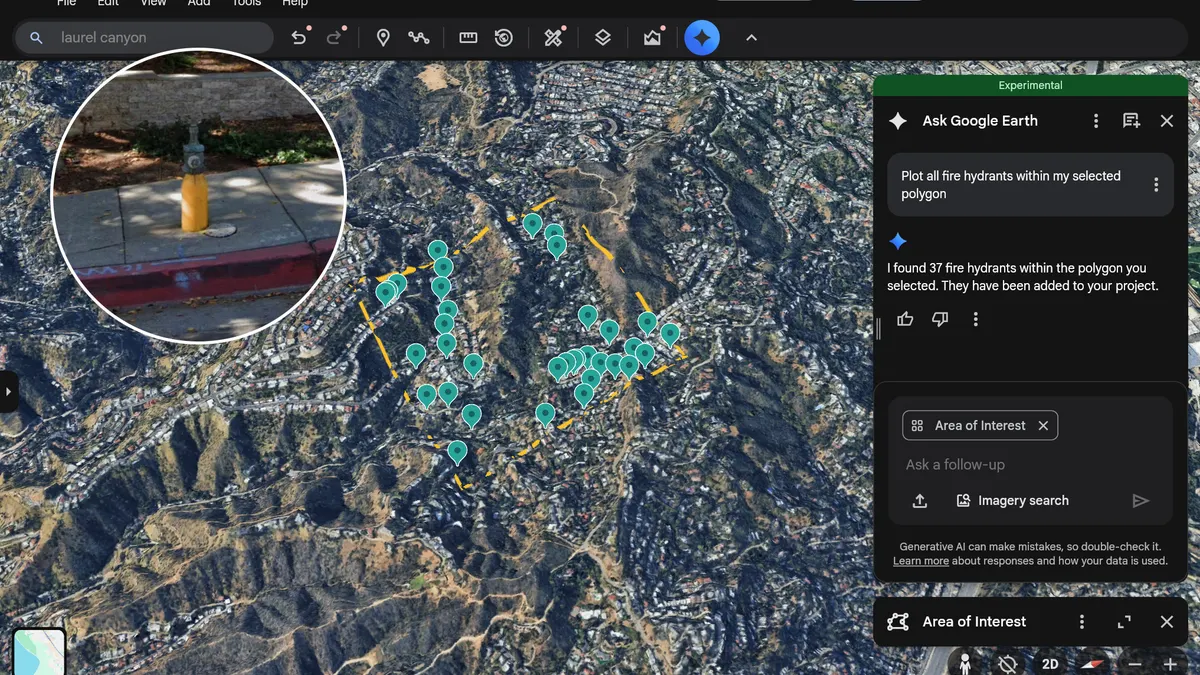

Today, many organizations overcome these challenges by investing in solutions that help parse vast amounts of structured and unstructured data. Cloud service providers offer data preparation tools designed for data extraction in AI applications. These tools use algorithms to identify and extract relevant data from diverse sources, reducing overall processing time and computational costs.

Navigating the complexities of data quality

Data quality is another major stumbling block. Even if data is centralized, inconsistencies, errors and outdated information can render it useless for AI applications and lead to inaccurate predictions and biased outcomes.

To address these issues, organizations can automate data cleansing with tools that eliminate duplicates and irrelevant information, correct errors and inconsistencies, and identify missing values and fill them in through imputation. Cleansing data before feeding it into large language models yields several benefits. Properly cleansed data leads to more precise and reliable AI outputs, faster model training and deployment, and increased confidence among users and stakeholders.
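As a rough illustration of those three cleansing steps, here is a minimal Python sketch using only the standard library. It is not any vendor's tool. The field names (`name`, `zip`, `household_size`), the rule that two records with the same normalized name and ZIP code are duplicates, and the choice of median imputation are all assumptions made for the example.

```python
from statistics import median


def cleanse(records):
    """Normalize text fields, drop duplicates and impute missing values.

    Hypothetical schema: each record is a dict with "name", "zip" and an
    optional numeric "household_size".
    """
    seen = set()
    cleaned = []
    for rec in records:
        # Correct inconsistencies: collapse whitespace, standardize case.
        name = " ".join(rec["name"].split()).title()
        zip_code = rec["zip"].strip()

        # Eliminate duplicates (assumed rule: same name and ZIP code).
        key = (name, zip_code)
        if key in seen:
            continue
        seen.add(key)

        cleaned.append({
            "name": name,
            "zip": zip_code,
            "household_size": rec.get("household_size"),
        })

    # Impute missing values with the median of the known values,
    # and flag imputed records so downstream users can see them.
    known = [r["household_size"] for r in cleaned
             if r["household_size"] is not None]
    fill = median(known) if known else None
    for r in cleaned:
        r["imputed"] = r["household_size"] is None
        if r["imputed"]:
            r["household_size"] = fill
    return cleaned
```

Flagging imputed values, rather than silently overwriting gaps, is one way to keep the process auditable. A production pipeline would likely need fuzzier matching and agency-specific rules.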

Moreover, maintaining data quality over time requires ongoing effort. Establishing and adhering to data quality standards is essential. This is where CDOs play a crucial role, ensuring that data remains clean, consistent and reliable.

The bedrock of any successful AI implementation, data quality standards provide a framework for data governance, ensuring that data is collected, stored and used consistently across all agencies. Similar to the framing of a sturdy family home, well-defined data quality standards provide the foundational structure for AI applications.

Enforcing these standards requires a cultural shift within local agencies, where data quality is prioritized and everyone understands their role in maintaining it. Regular audits and data quality assessments can help ensure compliance and identify areas for improvement.
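A data quality assessment can start small. The sketch below, again in plain Python, computes two common measures for each field: completeness (the share of records with a value) and validity (the share of present values that pass a rule). The function name `audit`, the example ZIP code pattern and the idea of passing one validation rule per field are illustrative assumptions, not a prescribed standard.

```python
import re

# Assumed rule for the example: U.S. ZIP or ZIP+4 format.
ZIP_PATTERN = re.compile(r"^\d{5}(-\d{4})?$")


def audit(records, validators):
    """Return completeness and validity rates for each audited field.

    `validators` maps a field name to a function that returns True
    when a present value is acceptable (hypothetical interface).
    """
    total = len(records)
    report = {}
    for field, is_valid in validators.items():
        values = [r.get(field) for r in records]
        present = [v for v in values if v not in (None, "")]
        valid = [v for v in present if is_valid(v)]
        report[field] = {
            "completeness": len(present) / total if total else 0.0,
            "validity": len(valid) / len(present) if present else 0.0,
        }
    return report
```

Running a check like this on a schedule and tracking the rates over time gives agencies a simple, shared view of where data falls short of the standard.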

Exploring solutions for data challenges

As AI's influence grows, proper data preparation is not just a technical requirement but a key strategic move. Cloud service providers are at the forefront of this dynamic industry, enabling organizations to fully leverage AI's capabilities through clean, high-quality data.

Additionally, cloud-based data platforms offer a solution for managing and integrating data from diverse sources. They provide the scalability, flexibility and security needed to handle the ever-growing volume and complexity of data. By embracing these solutions, local governments can pave the way for successful AI adoption and unlock its transformative potential.

Commentary is a space for state and local government leaders to share best practices that provide value to their peers. Email Smart Cities Dive to submit a piece for consideration, and view past commentaries here.

About the Author

Sarjoo Shah is industry executive director for state and local government at Oracle. He is a business and information technology leader with more than 30 years of experience in public and private sector leadership and executive roles. His expertise in building and leading teams with a focus on innovation and customer service is proven by his roles as HHS CIO for the state of Oklahoma and at Deloitte, SAIC and NTT DATA. Shah held various other positions, including state CTO, PMO director and treasurer within the executive committee of the American Public Human Services Association IT Solutions Management (APHSA-ISM), for several years.